Usable Data Mask

Overview

Clouds, aerosols, and other surface conditions can impact the radiometry and usability of Earth observation data. To improve the consistency of data analysis of satellite imagery for research or commercial applications, Planet provides the Usable Data Mask (UDM) to accurately classify these phenomena in every image published in the Planet data catalog.

UDM classifies each pixel in an image as Clear, Cloud, Haze, Cloud Shadow, or Snow, allowing users to determine which pixels are usable for their analysis. UDM2.1 is the latest version developed by Planet. It is provided in multiband GeoTIFF format, with each band corresponding to a different semantic class and two additional bands offering supplementary information.

Archive Availability

Usable Data Masks are available globally for PlanetScope imagery beginning in August 2018. In specific agricultural regions, UDMs are available from January 2018. A small percentage of PlanetScope imagery after August 2018 does not include the UDM2 asset due to rectification or image processing failures.

Usable Data Masks are available for SkySat imagery beginning in 2017. In rare instances, a UDM2 asset may not be available for specific SkySat items.

As of November 29, 2023, all PlanetScope and SkySat imagery is processed by UDM2.1. Imagery acquired before November 29, 2023 was processed by UDM2.0.

Key differences between UDM2.1 and UDM2.0

Planet published a new Usable Data Mask version (UDM2.1) in November, 2023. UDM2.1 has significantly improved classification accuracy compared to previous versions of the product. In addition, there are some key differences between the UDM versions:

- The classification schema for UDM was modified for UDM2.1 to contain a single Haze class, producing a more consistent, more accurate, and more easily understood product.

- The Heavy Haze class from UDM2.0 has been deprecated in UDM2.1. This class had lower predictive accuracy and was commonly confused with the Cloud and Light Haze classes.

- A new dataset of ground truth images was labelled to train new models with the updated classification schema. See Methodology for more detail.

- The Cloud class is defined as regions of a scene containing opaque atmospheric interference; objects on the ground cannot be seen through Cloud. In contrast, Haze is defined as any atmospheric interference through which the ground can still be seen.

- In some instances, the Near-Infrared (NIR) band is required to distinguish between Cloud and Haze, due to the "atmospheric window" of infrared wavelengths. As a result, Haze classifications in UDM will occasionally appear opaque in a Visual product, but are transparent when viewed as a false color image with the NIR band.

The formatting has not changed between UDM2.0 and UDM2.1; the product still contains 8 bands for backwards compatibility. The prior Light Haze band (band 4) now contains the UDM2.1 Haze classification values. The values for the Heavy Haze band (band 5) and the heavy_haze_percent metadata field will always be 0 for UDM2.1.
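Because band 5 is always 0 in UDM2.1, code that combines bands 4 and 5 should behave sensibly for both versions. The following is a minimal sketch of that idea, not Planet's implementation. The helper name `haze_mask` and the small nested lists standing in for raster bands are hypothetical; in practice the bands would be read from the GeoTIFF with a raster library.

```python
def haze_mask(light_haze_band, heavy_haze_band):
    """Return 1 where band 4 or band 5 flags a pixel as haze, else 0.

    Works for UDM2.0 (two haze bands) and UDM2.1 (band 5 is all zeros).
    """
    return [
        [1 if (light or heavy) else 0 for light, heavy in zip(row_l, row_h)]
        for row_l, row_h in zip(light_haze_band, heavy_haze_band)
    ]


# Hypothetical 2x3 excerpts of band 4 and band 5 (illustrative values only).
udm20_band4 = [[0, 1, 0], [0, 0, 0]]
udm20_band5 = [[0, 0, 1], [0, 0, 0]]
udm21_band4 = [[0, 1, 1], [0, 0, 0]]
udm21_band5 = [[0, 0, 0], [0, 0, 0]]  # always 0 for UDM2.1

print(haze_mask(udm20_band4, udm20_band5))
print(haze_mask(udm21_band4, udm21_band5))
```

Treating the two bands as a single haze mask is one possible design choice; workflows that relied on the UDM2.0 distinction between Light Haze and Heavy Haze would need to keep the bands separate for older imagery.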

UDM2.1 product bands

The UDM2.1 asset is delivered as an 8-band GeoTIFF file, with the following bands and values:

| Band | Class | Pixel Value Range | Description |
|---|---|---|---|
| 1 | Clear | 0,1 | Regions of a scene that are free of cloud, haze, cloud shadow and/or snow. |
| 2 | Snow | 0,1 | Regions of a scene that are covered with snow or ice. |
| 3 | Cloud Shadow | 0,1 | Shadows caused by clouds or haze and not by mountains, buildings, or other terrain features. |
| 4 | Light Haze | 0,1 | Regions of a scene with thin, filamentous clouds, soot, dust, and smoke. You can see ground objects through haze. |
| 5 | Heavy Haze | 0,1 | UDM2.1 does not support a heavy haze class, but this class name persists to support functional backwards compatibility with UDM2.0. Pixels will never be classified as Heavy Haze with UDM2.1. |
| 6 | Cloud | 0,1 | Regions of a scene that contain opaque clouds. You cannot see ground objects through clouds. |
| 7 | Confidence | 0-100 | Pixel-wise model prediction probability score. This is an estimate of how confident the model is that a pixel classification is correct. |
| 8 | Unusable Pixels | 0-255 | Equivalent to the UDM1 asset (Bitwise-encoded). The bits are as follows. Bit 0: Blackfill; Bit 1: Likely cloud; Bit 2: Blue (Band 2) is anomalous; Bit 3: Green (Band 4) is anomalous; Bit 4: Red (Band 6) is anomalous; Bit 5: Red Edge (Band 7) is anomalous; Bit 6: NIR (Band 8) is anomalous; Bit 7: Coastal Aerosol (Band 1) and/or Green-I (Band 3) and/or Yellow (Band 5) is anomalous. See Planet's Imagery Specification for complete details. |
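The bitwise encoding of band 8 can be unpacked with ordinary bit masking. The sketch below assumes only the bit assignments listed in the table above; the names `UNUSABLE_BITS` and `decode_unusable` are hypothetical and not part of any Planet library.

```python
# Bit positions for band 8, as listed in the band table.
UNUSABLE_BITS = {
    0: "Blackfill",
    1: "Likely cloud",
    2: "Blue (Band 2) is anomalous",
    3: "Green (Band 4) is anomalous",
    4: "Red (Band 6) is anomalous",
    5: "Red Edge (Band 7) is anomalous",
    6: "NIR (Band 8) is anomalous",
    7: "Coastal Aerosol (Band 1) and/or Green-I (Band 3) "
       "and/or Yellow (Band 5) is anomalous",
}


def decode_unusable(value):
    """Return the labels of all bits set in a single band 8 pixel value."""
    if not 0 <= value <= 255:
        raise ValueError("band 8 values must be in the range 0-255")
    return [label for bit, label in UNUSABLE_BITS.items() if value & (1 << bit)]


print(decode_unusable(0))   # no bits set
print(decode_unusable(3))   # bits 0 and 1 set
print(decode_unusable(66))  # bits 1 and 6 set
```

A value of 0 means that none of the listed conditions applies to the pixel.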

Model confidence

The UDM2.1 classification model outputs a prediction probability score for each pixel in the image, estimating confidence or certainty that a pixel classification is correct.

The confidence score can be used to define thresholds as to whether to use a given pixel’s classification. For example, if you want to increase the likelihood that all Cloud pixels in an image are masked, you can use a low confidence value to threshold all pixels classified in the Cloud class. Alternatively, a higher confidence threshold could be set for all Clear pixels, increasing the likelihood that usable pixels are correctly classified prior to an analysis.
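The thresholding described above can be sketched as follows. This is an illustrative example rather than a recommended configuration: the function name `select_pixels`, the sample values, and the thresholds of 25 and 80 are all assumptions chosen for demonstration, and small nested lists stand in for the class bands and the confidence band (band 7).

```python
def select_pixels(class_band, confidence_band, min_confidence):
    """Return 1 where the class band is 1 and confidence >= min_confidence."""
    return [
        [1 if flag == 1 and conf >= min_confidence else 0
         for flag, conf in zip(class_row, conf_row)]
        for class_row, conf_row in zip(class_band, confidence_band)
    ]


# Hypothetical 2x3 excerpts of band 1 (Clear), band 6 (Cloud), band 7 (Confidence).
clear = [[1, 1, 0], [1, 0, 0]]
cloud = [[0, 0, 1], [0, 1, 0]]
confidence = [[95, 60, 30], [85, 90, 70]]

# Low threshold: mask Cloud pixels even when the model is not very confident.
cloud_to_mask = select_pixels(cloud, confidence, min_confidence=25)

# High threshold: keep only Clear pixels the model is confident about.
trusted_clear = select_pixels(clear, confidence, min_confidence=80)

print(cloud_to_mask)
print(trusted_clear)
```

Suitable thresholds depend on the application and on how costly a missed cloud is compared with a discarded clear pixel.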

There will be times when the model confidence for a given classification is high, but the prediction is still incorrect. For example, a pixel could be classified with high confidence as Clear when it is in fact Cloud. In these cases, additional training data may be needed to improve model accuracy under those conditions. Users are encouraged to report any issues to their Customer Support Representative so Planet can continue to improve the product.

Metadata fields

These metadata fields are based on the UDM product, and can be used to construct filters when searching for Planet imagery. To learn more, refer to the Planet help page Search Filters.

| Field | Type | Value Range | Description |
|---|---|---|---|
| clear_percent | int | [0-100] | Percent of clear values in the image. Clear values represent regions of a scene that are free of cloud, haze, cloud shadow and/or snow. |
| clear_confidence_percent | int | [0-100] | Percentage value: aggregated algorithmic confidence in 'clear' pixel classifications. |
| cloud_percent | int | [0-100] | Percent of cloud values in the image. Cloud values represent regions of a scene that contain thick clouds. You cannot see ground objects through clouds. |
| heavy_haze_percent | int | [0-100] | Note: UDM2.1 does not include a heavy haze classification. Any non-zero values for this field were generated by the UDM2.0 product. For UDM2.0: Percent of heavy haze values in the image. Heavy haze values represent scene content areas that contain thin low altitude clouds, higher altitude cirrus clouds, soot and dust which allow fair recognition of land cover features, but do not allow reliable interpretation of the radiometry or surface reflectance. |
| light_haze_percent | int | [0-100] | Percent of haze values in the image. Haze values represent regions of a scene with thin, filamentous clouds, soot, dust, and smoke. You can see ground objects through haze. |
| shadow_percent | int | [0-100] | Percent of cloud shadow values in the image. Shadow values represent regions of the scene that are covered by shadows caused by clouds. |
| snow_ice_percent | int | [0-100] | Percent of snow and ice values in the image. Snow_ice values represent regions of the scene that are hidden below snow and/or ice. |
| visible_percent | int | [0-100] | Visible values represent the fraction of the scene content (excluding the portion of the image which contains blackfill) which comprises clear, light haze, shadow, snow/ice categories, and is given as a percentage ranging from zero to one hundred. |
| visible_confidence_percent | int | [0-100] | Average of confidence percent for clear_percent, light_haze_percent, shadow_percent and snow_ice_percent. |

* Blackfill content refers to empty regions of a scene file that have no value
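As an illustration of how these fields might be combined into a search filter, the sketch below builds a filter as a plain Python dictionary. It assumes the `RangeFilter` and `AndFilter` JSON structures used by Planet's Data API; the helper name `build_udm_filter` and the threshold values are hypothetical, and the Search Filters help page should be treated as the authoritative reference for the filter syntax.

```python
import json


def build_udm_filter(min_clear_percent, max_cloud_percent):
    """Build a search filter on clear_percent and cloud_percent.

    Assumes the RangeFilter / AndFilter JSON layout of Planet's Data API.
    """
    for value in (min_clear_percent, max_cloud_percent):
        if not 0 <= value <= 100:
            raise ValueError("percent values must be in the range 0-100")
    return {
        "type": "AndFilter",
        "config": [
            {
                "type": "RangeFilter",
                "field_name": "clear_percent",
                "config": {"gte": min_clear_percent},
            },
            {
                "type": "RangeFilter",
                "field_name": "cloud_percent",
                "config": {"lte": max_cloud_percent},
            },
        ],
    }


# Illustrative thresholds: at least 90% clear and at most 5% cloud.
udm_filter = build_udm_filter(min_clear_percent=90, max_cloud_percent=5)
print(json.dumps(udm_filter, indent=2))
```

The resulting dictionary would typically be sent as the filter portion of a search request; the request itself is omitted here because it depends on the client and credentials in use.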

UDM2.1 classification methodology

Planet’s UDM2.1 classification approach is based on supervised machine learning techniques that use observation data from Planet satellites to train a classification model.

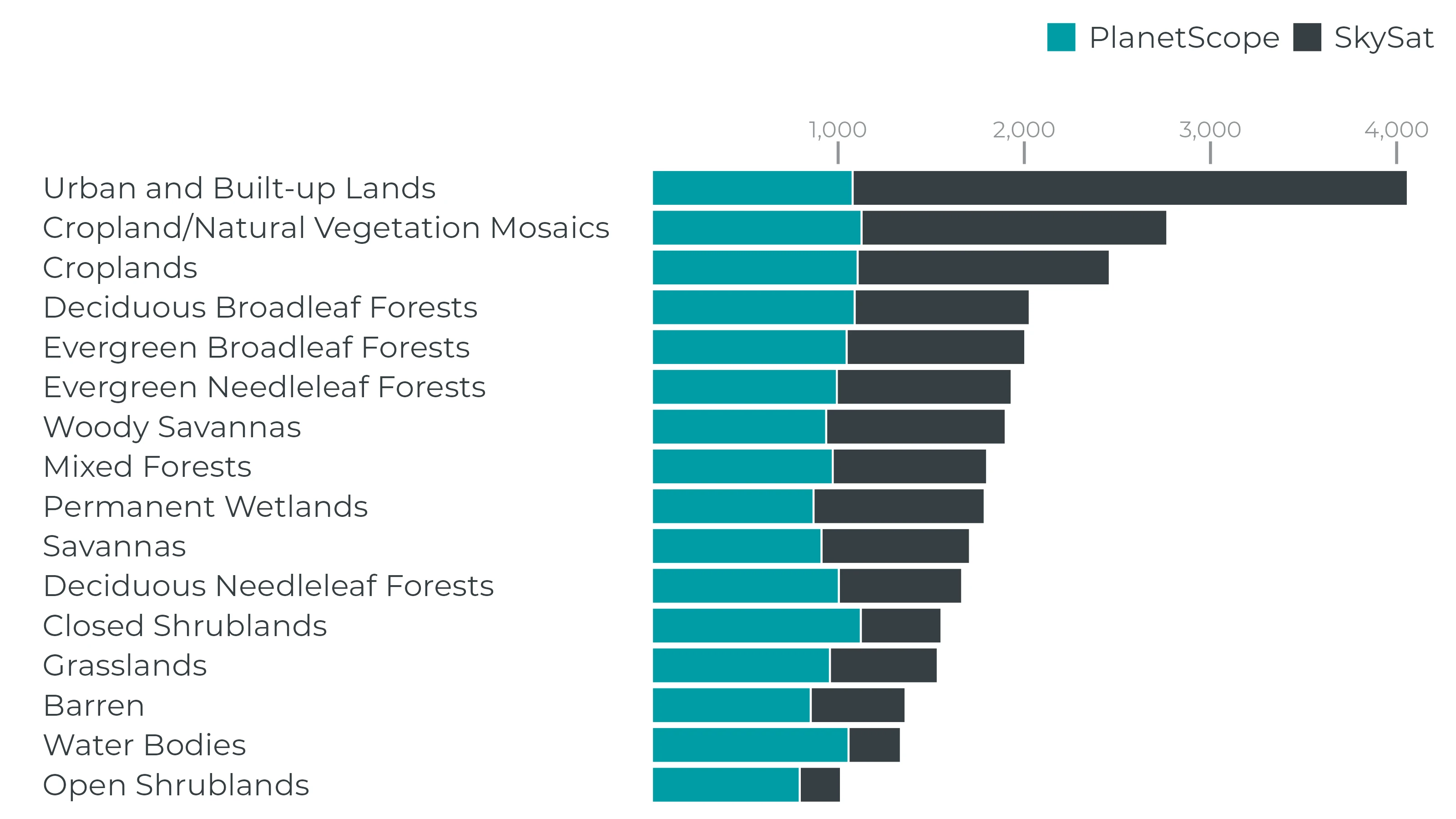

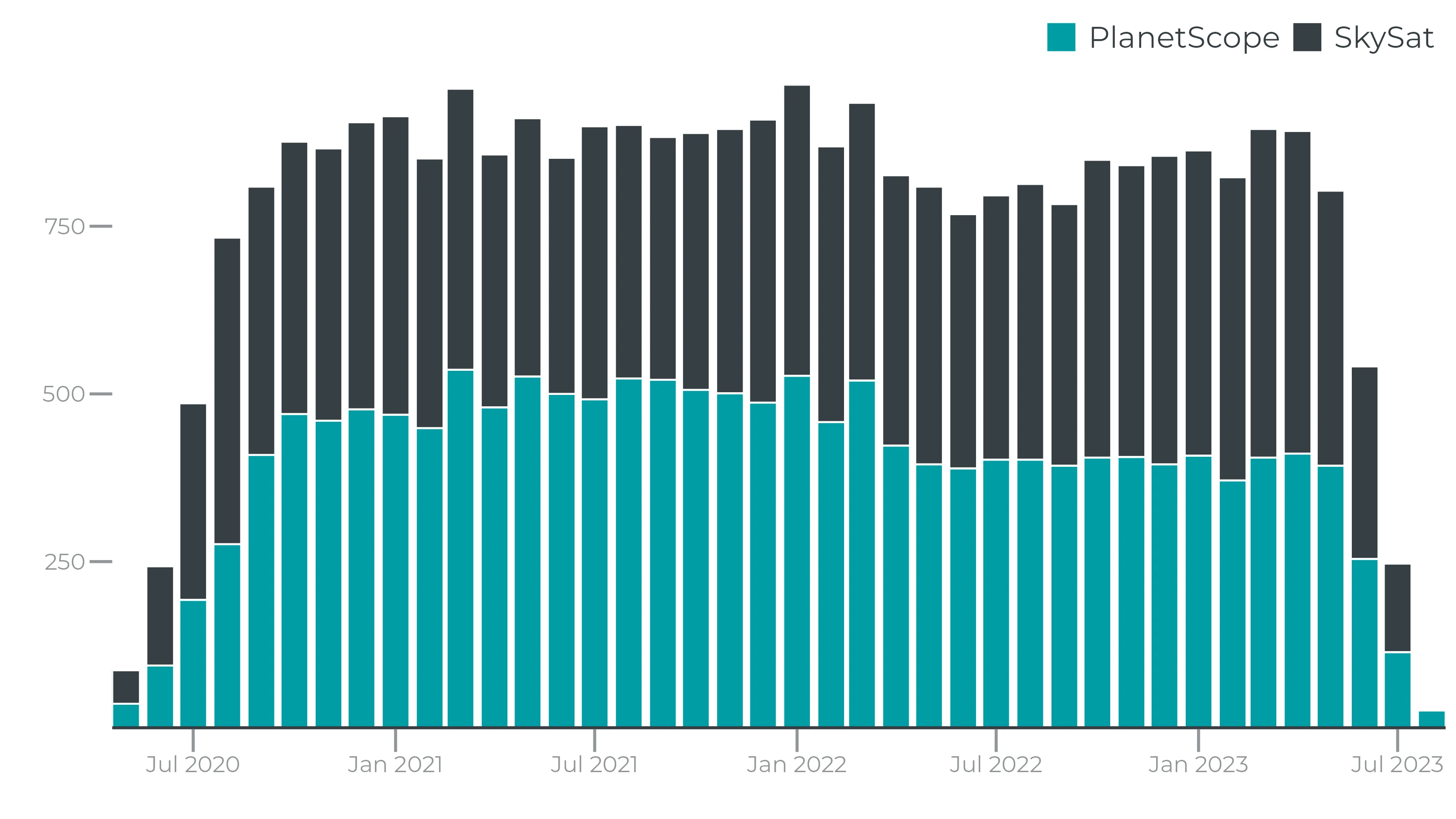

The architecture for UDM2.1 is a modified U-Net, a supervised deep learning segmentation model, that runs on 4-band (RGB-NIR) top-of-atmosphere radiance imagery. A diverse corpus containing tens of thousands of PlanetScope and SkySat images was hand-labeled to assign each pixel to one of the 5 classes noted above. The model was then trained on this labeled dataset so that it can classify new imagery into those same 5 classes.

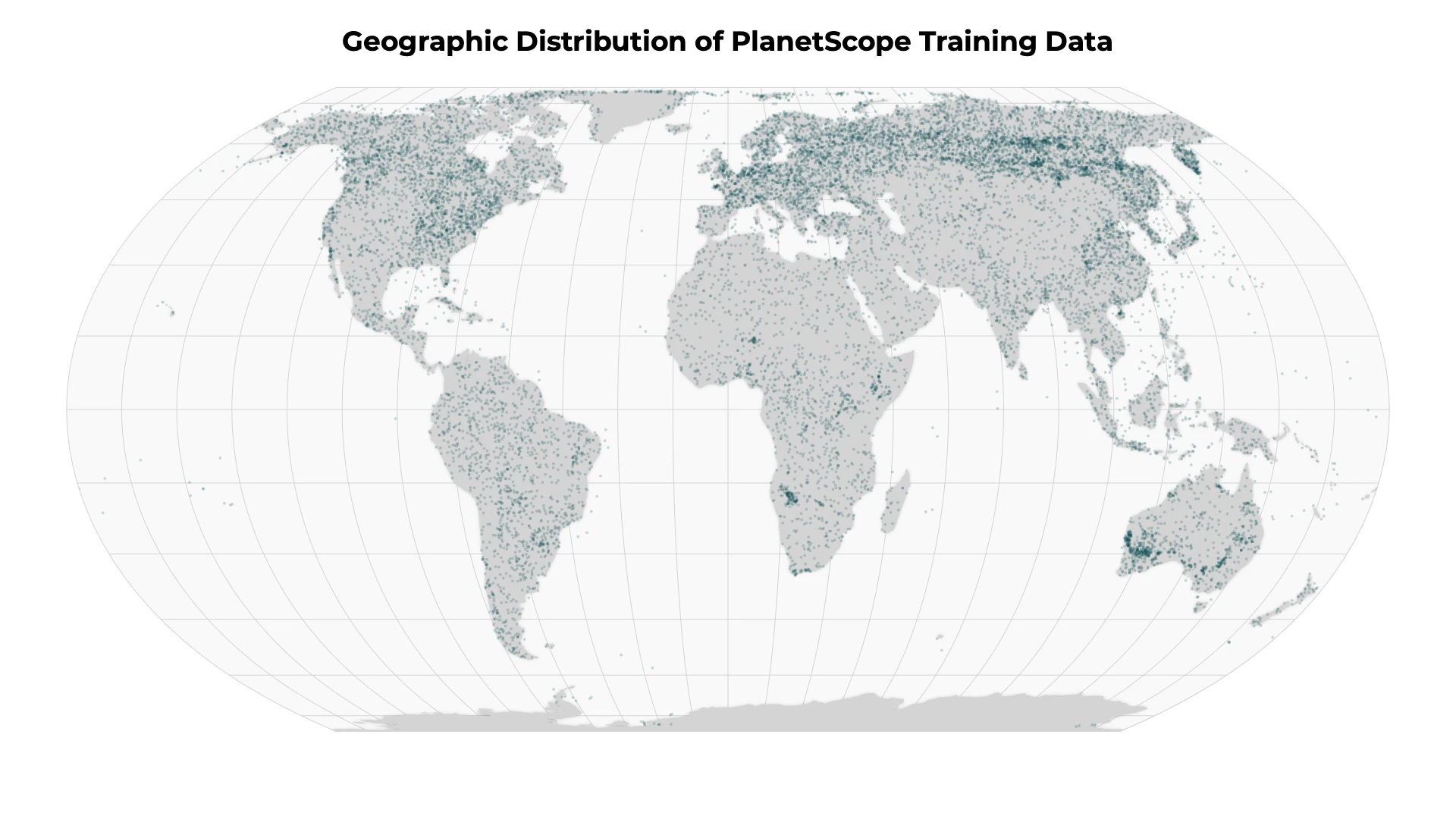

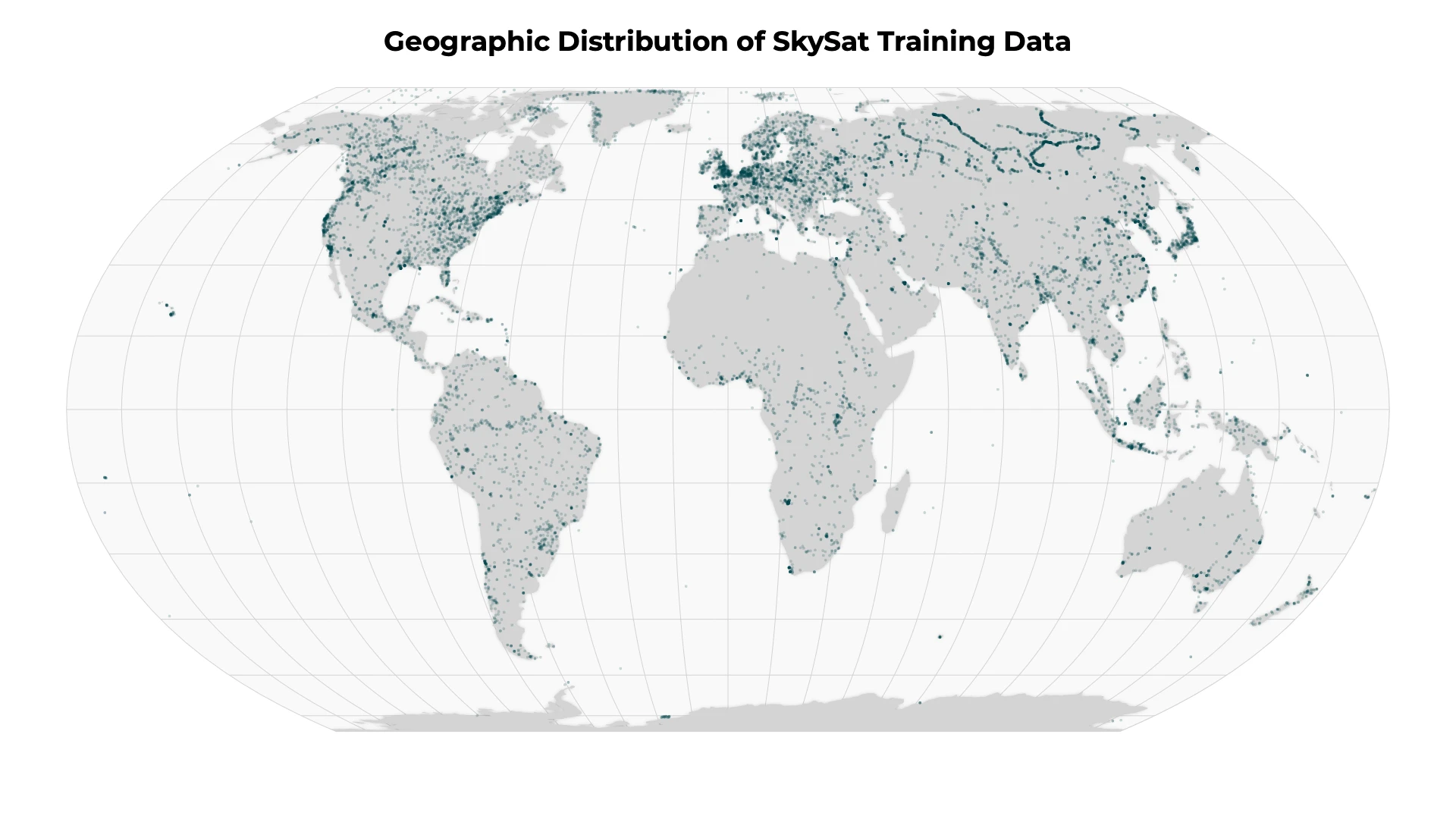

To ensure that the truth scene dataset is representative of the global catalog of Planet images, Planet draws from a diversity of satellites, scene content, seasonality, geography, and cloud types in the truth scene curation and classification process.

Model Accuracy

Planet evaluates the UDM by comparing model predictions to a sample of held-out labeled data called a validation dataset or ground truth dataset. We perform a quantitative evaluation of model accuracy by computing precision, recall, and F1 metrics on a per-class basis. For our validation datasets of thousands of images, we observed the following F1, precision, and recall metrics:

PlanetScope

| Class | F1 | Precision | Recall |
|---|---|---|---|
| Clear | 0.909 | 0.871 | 0.951 |
| Snow | 0.770 | 0.777 | 0.763 |
| Cloud Shadow | 0.583 | 0.579 | 0.588 |
| Light Haze | 0.592 | 0.563 | 0.623 |
| Heavy Haze | n/a | n/a | n/a |
| Cloud | 0.945 | 0.929 | 0.961 |

SkySat

| Class | F1 | Precision | Recall |
|---|---|---|---|
| Clear | 0.848 | 0.816 | 0.883 |
| Snow | 0.803 | 0.780 | 0.87 |
| Cloud Shadow | 0.442 | 0.391 | 0.508 |
| Light Haze | 0.619 | 0.581 | 0.662 |
| Heavy Haze | n/a | n/a | n/a |
| Cloud | 0.924 | 0.905 | 0.944 |
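For readers who want to run a similar evaluation on their own labeled samples, per-class precision, recall, and F1 follow the standard definitions based on true positives, false positives, and false negatives. The sketch below applies those definitions to flat lists of per-pixel labels. It is not Planet's evaluation pipeline, whose aggregation details are not described here; the function name `per_class_metrics` and the sample labels are hypothetical.

```python
def per_class_metrics(truth, predicted, classes):
    """Compute precision, recall, and F1 for each class from paired labels."""
    metrics = {}
    for cls in classes:
        pairs = list(zip(truth, predicted))
        tp = sum(1 for t, p in pairs if t == cls and p == cls)
        fp = sum(1 for t, p in pairs if t != cls and p == cls)
        fn = sum(1 for t, p in pairs if t == cls and p != cls)
        # Report 0.0 when a ratio is undefined (no predictions or no truth).
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics[cls] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


# Hypothetical per-pixel labels for six pixels (illustrative only).
truth = ["Clear", "Clear", "Cloud", "Cloud", "Haze", "Clear"]
predicted = ["Clear", "Cloud", "Cloud", "Cloud", "Clear", "Clear"]

results = per_class_metrics(truth, predicted, ["Clear", "Cloud", "Haze"])
for name, scores in results.items():
    print(name, {key: round(val, 3) for key, val in scores.items()})
```

Reporting 0.0 for an undefined ratio is one convention among several; other tools may report such cases as undefined instead.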

Planet engineering teams regularly review cloud masks and identify new scenes to add to our ground truth datasets. Additionally, your reports of poorly performing scenes or regions are part of the feedback process to improve our training data and model performance.

Planet publishes imagery globally. Our goal is to ensure the highest quality in our model accuracy, but there can be anomalous behavior. If you observe poor quality, please contact your Customer Support Representative so Planet can address these issues with quarterly iterations of the model.