SkySat

SkySat, operated by Planet, is a high-resolution constellation of 15 satellites that can revisit any location on Earth up to 10 times daily, with a collection capacity of 4,000 km²/day. SkySat supports Planet's high-resolution tasking, which lets you choose when and where to capture SkySat imagery. SkySat produces 4-band multispectral and panchromatic imagery, sampled at 50 centimeters per pixel when orthorectified.

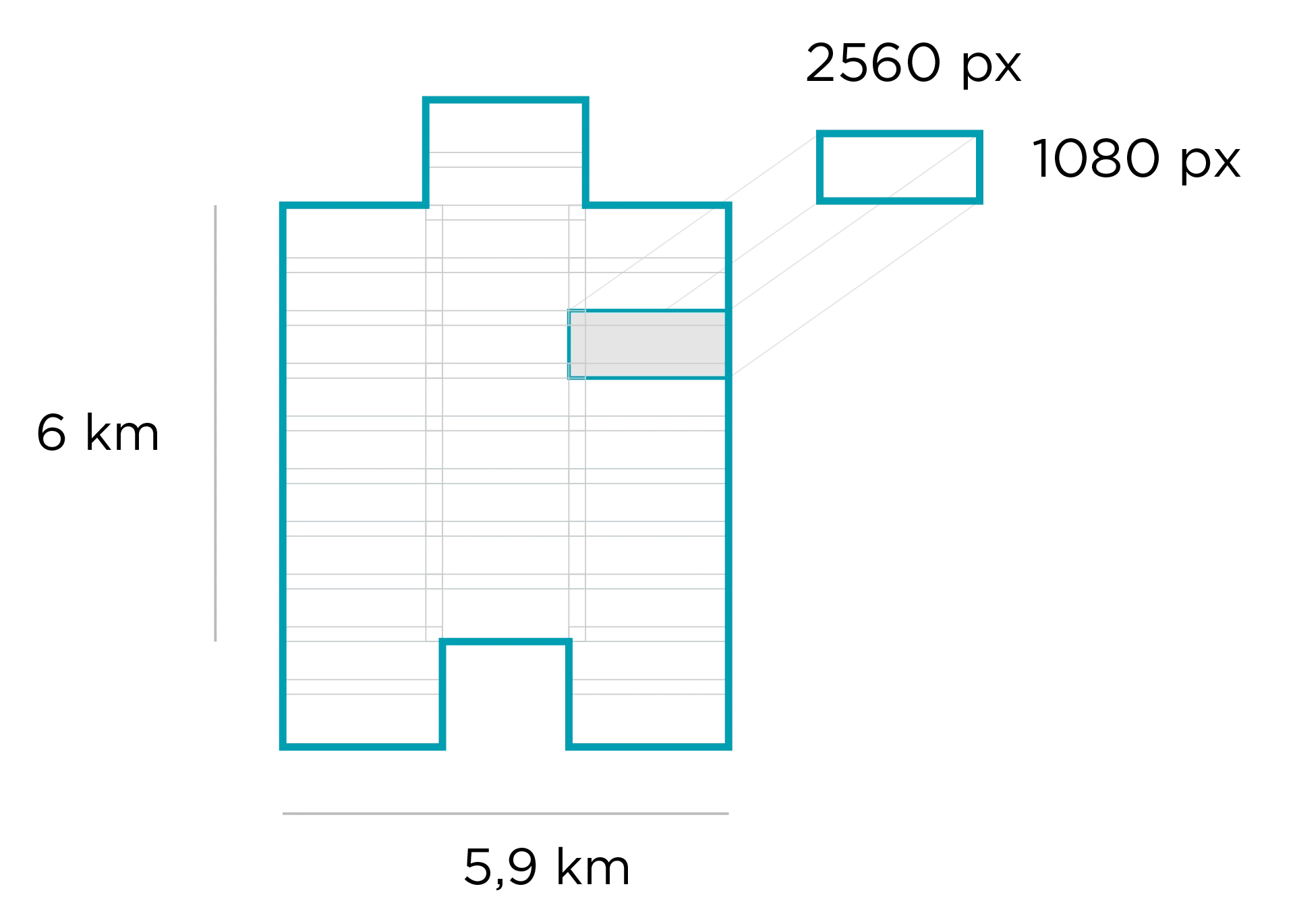

The SkySat constellation consists of multiple launches of Planet's SkySat-C generation satellites, first launched in 2016. Each satellite is 3-axis stabilized and agile enough to slew between different targets of interest. Each satellite has four thrusters for orbital control, along with four reaction wheels and three magnetic torquers for attitude control. Currently, the SkySat constellation consists of morning and afternoon sun-synchronous satellites with an operational altitude of 475 km. Latitude coverage extends up to ±80°, depending on the season. SkySat collects a swath approximately 6 km wide.

All SkySat satellites contain Cassegrain telescopes with a focal length of 3.6 m, with three overlapping 5.5 megapixel CMOS imaging detectors making up the focal plane. The central detector is slightly offset from the side detectors, giving SkySat imagery its unique "tuning fork" outline. SkySat can collect frame imagery, stereo imagery, and video, in day or night collects. SkySat images are typically collected in short exposures, coregistered, and stacked to improve the signal-to-noise ratio (SNR) and ground sample distance (GSD) through super-resolution. SkySat collects RGB, NIR, and panchromatic imagery.

| Band | Name | Wavelength |
|---|---|---|
| 1 | Blue | 450 - 515 nm |
| 2 | Green | 515 - 595 nm |
| 3 | Red | 605 - 695 nm |
| 4 | Near IR | 740 - 900 nm |
| NA | Panchromatic | 450 - 900 nm |
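As a small illustration of how the band table can be used in code, the sketch below stores the band edges from the table and looks up which bands cover a given wavelength. The `Band` type and the `bands_covering` function are hypothetical names introduced for this example only; they are not part of any Planet library.

```python
from typing import List, NamedTuple


class Band(NamedTuple):
    name: str
    min_nm: int
    max_nm: int


# Band edges copied from the table above.
SKYSAT_BANDS = [
    Band("Blue", 450, 515),
    Band("Green", 515, 595),
    Band("Red", 605, 695),
    Band("Near IR", 740, 900),
    Band("Panchromatic", 450, 900),
]


def bands_covering(wavelength_nm: float) -> List[str]:
    """Return the name of every band whose range includes the wavelength."""
    return [
        band.name
        for band in SKYSAT_BANDS
        if band.min_nm <= wavelength_nm <= band.max_nm
    ]


print(bands_covering(650))  # a wavelength inside the Red range
print(bands_covering(700))  # a wavelength in the gap between Red and Near IR
```

The second lookup shows that the panchromatic band also covers the gaps between the multispectral bands, which is one reason the panchromatic assets are described as having a wider spectral range.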

SkySat Imagery Products

SkySat imagery products are available for search, ordering, and download via Planet's APIs, User Interfaces, and Integrations. Planet offers several item types for SkySat imagery. Small AOIs that are covered by a single SkySat frame image may be ordered as a SkySatScene item. Larger strips are available as coregistered, stacked, mosaicked scenes with the SkySatCollect item. Videos are delivered with the SkySatVideo item. For SkySatScene and SkySatCollect, different levels of image processing for the final product can be specified via the asset type. More information on Planet Item and Asset types is available here.

SkySat Item Types

A SkySat Scene Product is an individual framed scene within a strip, captured by the satellite in its line-scan of the Earth. SkySat satellites have three cameras per satellite, which capture three overlapping strips. Each of these strips contains overlapping scenes, not organized to any particular tiling grid system.

SkySat Scene products are approximately 1 x 2.5 km in size and are represented in the Planet Platform as the SkySatScene item type. If you need an image that covers an area of interest larger than a SkySat Scene, you can order a SkySat Collect Product.

A SkySat Collect Product is created by composing many SkySat Scenes along an imaging strip into an orthorectified segment. Collects are often composed of 60 or more scenes and are at least 5 km long. They are represented in the Planet Platform as the SkySatCollect item type. This product may be easier to handle if you are working with larger areas of interest. Because of the image rectification involved in creating this product, Collect is generally recommended over the Scene product when the AOI spans multiple scenes, particularly if a mosaic or composite of the individual scenes is required. Collect performs the necessary rectification steps automatically, which is especially useful if you are not comfortable performing orthorectification manually.

A SkySat Video Product is a full-motion video, between 30 and 120 seconds long, collected by a single camera from any of the SkySat satellites. Its footprint is comparable to a SkySat Scene, about 1 x 2.5 km. It is represented in the Planet Platform as the SkySatVideo item type.

SkySat Scene and Collect

SkySat Imagery Asset Types

SkySat Scene and Collect products are available for download in the form of imagery assets. Multiple asset types are available for Scene and Collect products, each with differences in radiometric processing and/or rectification.

Asset type availability varies by item type. Further details on the assets supported by each item type are given on that item type's documentation page.

Basic L1A Panchromatic (basic_l1a_panchromatic_d) assets are non-orthorectified, uncalibrated, panchromatic-only imagery products with native sensor resolution. They are typically ready two hours after capture and before all other SkySat asset types are available in the catalog. These products are designed for time-sensitive, low-latency monitoring applications. They can be geometrically corrected with the associated rational polynomial coefficient (RPC) assets derived from satellite telemetry.

Basic Analytic (basic_analytic) assets are non-orthorectified, calibrated, multispectral imagery products with native sensor resolution that have been transformed to Top of Atmosphere (at-sensor) radiance. The multispectral bands have been co-registered to each other and to the ground. Rational polynomial coefficient (RPC) assets (ground control applied) are provided for users who wish to geometrically correct the data themselves. This product is designed for data science and analytic applications.

Basic Panchromatic (basic_panchromatic) assets are non-orthorectified, calibrated, super-resolved, panchromatic-only imagery products that have been transformed to Top of Atmosphere (at-sensor) radiance. Rational polynomial coefficient (RPC) assets (ground control applied) are provided if you want to geometrically correct the data. A basic panchromatic image is coregistered with the associated basic analytic image. This product is designed for data science and analytic applications which depend on a wider spectral range (Pan: 450 - 900 nm).

Ortho Analytic (ortho_analytic) assets are orthorectified, calibrated, multispectral imagery products with native sensor resolution that have been transformed to Top of Atmosphere (at-sensor) radiance. These products are designed for data science and analytic applications which require imagery with accurate geolocation and cartographic projection.

Ortho Analytic Surface Reflectance (ortho_analytic_sr) assets are corrected for the effects of the Earth's atmosphere, accounting for the molecular composition and variation with altitude along with aerosol content. Combining the use of standard atmospheric models with the use of MODIS water vapor, ozone and aerosol data, this provides reliable and consistent surface reflectance scenes over Planet's varied constellation of satellites as part of our normal, on-demand data pipeline.

Ortho Panchromatic (ortho_panchromatic) assets are orthorectified, calibrated, super-resolved, panchromatic-only imagery products that have been transformed to Top of Atmosphere (at-sensor) radiance. Final image products are sampled at 50 cm. These products are designed for data science and analytic applications which require a wider spectral range (Pan: 450 - 900 nm), highest available resolution, and accurate geolocation and cartographic projection.

Ortho Pansharpened (ortho_pansharpened) assets are orthorectified, uncalibrated, super-resolved, multispectral imagery products. Lower resolution multispectral bands are sharpened to match the resolution of the super-resolved panchromatic band. Final image products are sampled at 50 cm resolution. These products are designed for multispectral applications which require highest available resolution and accurate geolocation and cartographic projection.

Ortho Visual (ortho_visual) assets are orthorectified, color-corrected, super-resolved, RGB imagery products. Color corrections optimized for the human eye are applied, providing images as they would look if viewed from the perspective of the satellite. Lower resolution multispectral bands are sharpened by the super-resolved panchromatic band. Final image products are sampled at 50 cm resolution. These products are designed for simple and direct visual inspection, and can be ingested directly into a Geographic Information System or application.
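The asset descriptions above can be condensed into a small lookup table for choosing an asset programmatically. The sketch below is only an illustration: the `Asset` type and `find_assets` function are hypothetical names, the asset identifiers are copied from the text above, and the flags reflect only what each description states. In particular, `calibrated=False` means the description does not call the asset calibrated, and the `ortho_analytic_sr` flags are inferred from its name rather than stated outright.

```python
from typing import Dict, List, NamedTuple, Optional


class Asset(NamedTuple):
    orthorectified: bool
    calibrated: bool
    super_resolved: bool
    bands: str  # "pan", "multispectral", or "rgb"


# Flags summarize the prose descriptions above; verify against the
# item type documentation before relying on them.
ASSETS: Dict[str, Asset] = {
    "basic_l1a_panchromatic_d": Asset(False, False, False, "pan"),
    "basic_analytic": Asset(False, True, False, "multispectral"),
    "basic_panchromatic": Asset(False, True, True, "pan"),
    "ortho_analytic": Asset(True, True, False, "multispectral"),
    "ortho_analytic_sr": Asset(True, True, False, "multispectral"),  # inferred
    "ortho_panchromatic": Asset(True, True, True, "pan"),
    "ortho_pansharpened": Asset(True, False, True, "multispectral"),
    "ortho_visual": Asset(True, False, True, "rgb"),
}


def find_assets(
    *,
    orthorectified: Optional[bool] = None,
    calibrated: Optional[bool] = None,
    super_resolved: Optional[bool] = None,
    bands: Optional[str] = None,
) -> List[str]:
    """Return asset names matching every criterion that is not None."""
    wanted = {
        "orthorectified": orthorectified,
        "calibrated": calibrated,
        "super_resolved": super_resolved,
        "bands": bands,
    }
    return [
        name
        for name, asset in ASSETS.items()
        if all(v is None or getattr(asset, k) == v for k, v in wanted.items())
    ]


# Example: calibrated, orthorectified multispectral assets for analysis.
print(find_assets(orthorectified=True, calibrated=True, bands="multispectral"))
```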

Additional details about formatting, calibration, and data scheme are available in the Imagery Product Specification document. Planet customers can review our quarterly SkySat image reports here.

SkySat Video Asset Types

An overview of the assets supported by the SkySatVideo item type is available here.

Video File (video_file) assets are MP4 video files, produced with the Basic L1A Panchromatic scene assets captured as part of the full-motion video. Currently, no stabilization is applied to video.

Video Frames (video_frames) assets are compressed folders which include all of the frames used to create the Video File, packaged as Basic L1A Panchromatic scene assets with accompanying rational polynomial coefficients (RPCs). These products are designed primarily for customers interested in using video frames for 3D reconstruction.

Product Naming

The name of each acquired SkySat image is unique and allows for easier recognition and sorting of the imagery. Currently, it includes the date and approximate time of capture, as well as the ID of the satellite that captured it. The name of each downloaded image product is composed of the following elements:

SkySatScene

{acquisition date}_{acquisition time}_{satellite_id}{camera_id}_{frame_id}_{asset}.{extension}

Example: 20200814_162132_ssc4d3_0021_analytic.tif

SkySat Collect

{acquisition date}_{acquisition time}_{satellite_id}_{frame_id}_{bandProduct}.{extension}

Example: 20200815_091045_ssc6_u0002_visual.tif

SkySat Video

{acquisition date}_{acquisition time}_{satellite_id}{camera_id}_video.mp4

Example: 20200808_133717_ssc3d1_video.mp4
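The naming patterns above can be parsed with a single regular expression. The sketch below is a minimal example, not an official parser: `parse_skysat_name` is a hypothetical helper, and the shapes of the satellite ID (`ssc` plus digits), camera ID (`d` plus one digit), and frame ID (digits with an optional one-letter prefix) are assumptions inferred from the three examples rather than from a formal specification.

```python
import re
from datetime import datetime
from typing import Dict, Optional

# Assumed field shapes, inferred from the examples above:
#   satellite_id: "ssc<digits>", camera_id: "d<digit>" (absent for Collects),
#   frame_id: digits with an optional letter prefix (absent for Videos).
_NAME = re.compile(
    r"^(?P<date>\d{8})_(?P<time>\d{6})_"
    r"(?P<satellite_id>ssc\d+)(?P<camera_id>d\d)?"
    r"(?:_(?P<frame_id>[a-z]?\d+))?"
    r"_(?P<asset>[A-Za-z0-9_]+)\.(?P<extension>[A-Za-z0-9]+)$"
)


def parse_skysat_name(filename: str) -> Dict[str, Optional[object]]:
    """Split a SkySat product file name into its named elements."""
    match = _NAME.match(filename)
    if match is None:
        raise ValueError(f"not a recognized SkySat product name: {filename!r}")
    fields: Dict[str, Optional[object]] = dict(match.groupdict())
    # Combine date and time into one timestamp (time zone is not stated above).
    stamp = str(fields.pop("date")) + str(fields.pop("time"))
    fields["acquired"] = datetime.strptime(stamp, "%Y%m%d%H%M%S")
    return fields


for name in (
    "20200814_162132_ssc4d3_0021_analytic.tif",
    "20200815_091045_ssc6_u0002_visual.tif",
    "20200808_133717_ssc3d1_video.mp4",
):
    print(parse_skysat_name(name))
```

Fields that a pattern does not contain, such as the camera ID of a Collect or the frame ID of a Video, come back as `None`.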

Processing

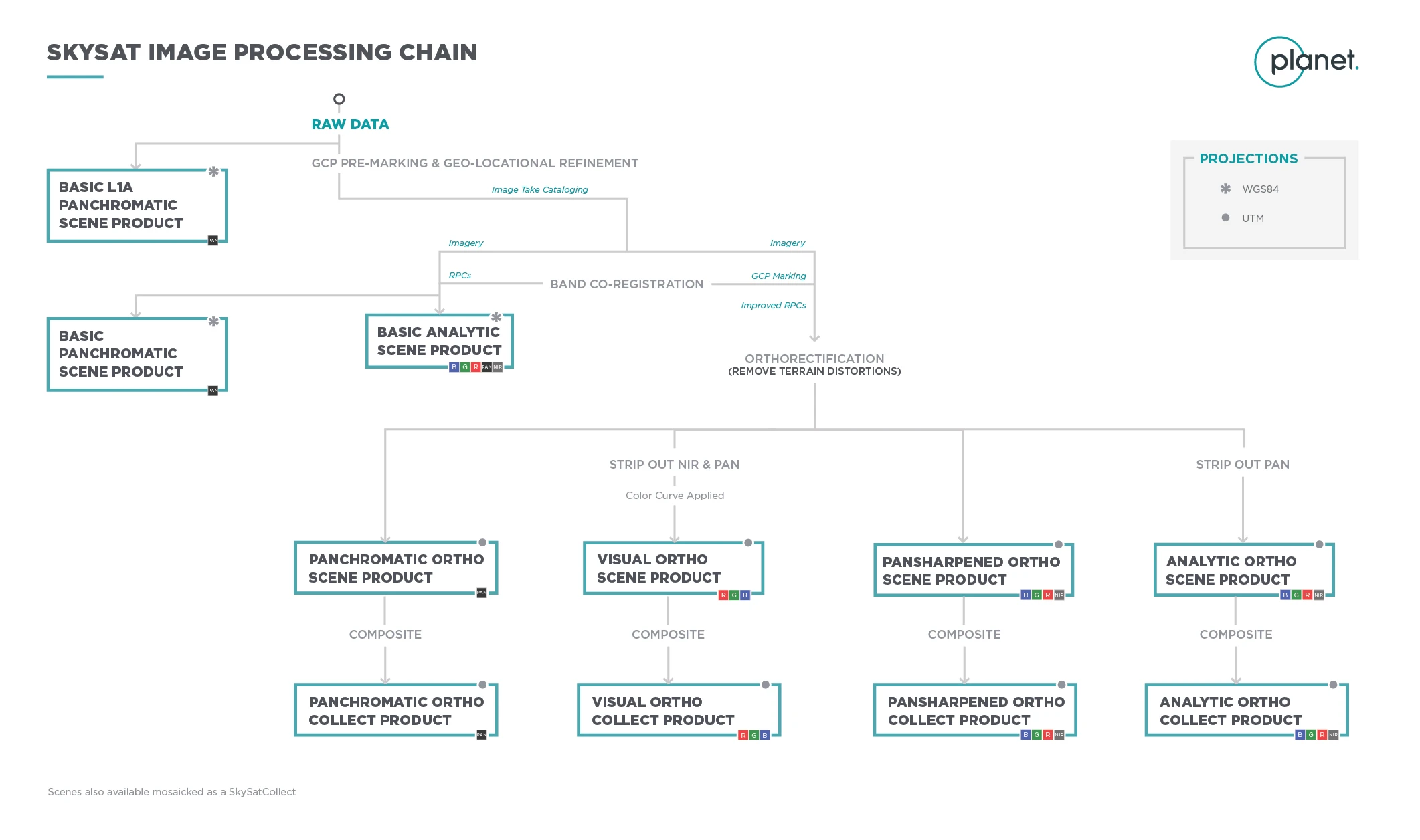

Several processing steps are applied to SkySat imagery to produce the set of data products available for download.

SkySat Processing

Sensor & Radiometric Calibration

Darkfield/Offset Correction: Corrects for sensor bias and dark noise. Master offset tables are created by averaging on-orbit darkfield collects across 5-10 degree temperature bins and applied to scenes during processing based on the CCD temperature at acquisition time.

Flat Field Correction: Flat fields are collected for each optical instrument prior to launch. These fields are used to correct image lighting and CCD element effects to match the optimal response area of the sensor. Flat fields are routinely updated on-orbit during the satellite lifetime.
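The two corrections above follow a common pattern in sensor calibration: subtract a per-pixel offset, then divide by a per-pixel flat-field gain. The sketch below shows that generic textbook form only. It is not Planet's pipeline; the function names, the temperature-bin layout, and the sample values are all hypothetical.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]


def select_offset_table(
    tables: Dict[Tuple[float, float], Image], temperature_c: float
) -> Image:
    """Pick the master offset table whose temperature bin contains the reading."""
    for (low_c, high_c), table in tables.items():
        if low_c <= temperature_c < high_c:
            return table
    raise ValueError(f"no offset table covers {temperature_c} degrees C")


def correct_frame(raw: Image, offset: Image, flat: Image) -> Image:
    """Apply (raw - offset) / flat to every pixel."""
    return [
        [(r - o) / f for r, o, f in zip(raw_row, offset_row, flat_row)]
        for raw_row, offset_row, flat_row in zip(raw, offset, flat)
    ]


# Hypothetical 1 x 2 pixel example with two 10-degree temperature bins.
tables = {
    (0.0, 10.0): [[10.0, 10.0]],
    (10.0, 20.0): [[12.0, 12.0]],
}
offset = select_offset_table(tables, temperature_c=15.0)
corrected = correct_frame([[112.0, 212.0]], offset, flat=[[1.0, 2.0]])
print(corrected)
```

After correction, both pixels report the same value even though the second pixel is twice as sensitive, which is the purpose of the flat field.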

Camera Acquisition Parameter Correction: Determines a common radiometric response for each image (regardless of exposure time, number of TDI stages, gain, camera temperature and other camera parameters).

Inter-Sensor Radiometric Response (Intra-Camera): Cross-calibrates the three sensors in each camera to a common relative radiometric response. The offsets between sensors are derived using on-orbit cloud flats and the overlap regions between sensors on SkySat spacecraft.

Super Resolution (Level 1B Processing): Super resolution is the process of creating an improved resolution image by fusing information from low resolution images, with the created higher resolution image being a better description of the scene.

Orthorectification

Removes terrain distortions. This process consists of two steps:

- The rectification tiedown process, wherein tie points are identified across the source images and a collection of reference images (ALOS, NAIP, Landsat), and RPCs are generated.

- The actual orthorectification of the scenes using the RPCs, to remove terrain distortions. The terrain model used for the orthorectification process is derived from multiple sources (Intermap, NED, SRTM and other local elevation datasets) which are periodically updated. Snapshots of the elevation datasets used are archived, which helps identify the DEM that was used for any given scene at any given point in time.

Visual Product Processing

Presents the imagery as natural color, optimized as seen by the human eye. This process consists of three steps:

- Nominalization - Sun angle correction, to account for differences in latitude and time of acquisition. This makes the imagery appear to look like it was acquired at the same sun angle by converting the exposure time to the nominal time (noon).

- Unsharp mask (sharpening filter) applied before the warp process.

- Custom color curve applied post warping.
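For readers unfamiliar with the unsharp mask step, the sketch below shows the general technique on a single row of pixel values: blur the signal, then add back a scaled copy of the difference between the original and the blur. The 3-tap box blur, the `amount` parameter, and the function names are illustrative assumptions; the kernel and strength used in the actual visual product are not stated here.

```python
from typing import List


def box_blur_3(row: List[float]) -> List[float]:
    """3-tap box blur; edge pixels reuse their own value for the missing neighbor."""
    last = len(row) - 1
    return [
        (row[max(i - 1, 0)] + row[i] + row[min(i + 1, last)]) / 3.0
        for i in range(len(row))
    ]


def unsharp_mask(row: List[float], amount: float = 1.0) -> List[float]:
    """Sharpen by adding amount * (original - blurred) to each pixel."""
    blurred = box_blur_3(row)
    return [value + amount * (value - blur) for value, blur in zip(row, blurred)]


flat = unsharp_mask([5.0, 5.0, 5.0])          # uniform input is left unchanged
edge = unsharp_mask([0.0, 0.0, 10.0, 10.0])   # contrast across the edge increases
print(flat, edge)
```

On the step edge, the pixel just before the edge is pushed below its original value and the pixel just after is pushed above it, which is what makes edges look crisper.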

API Access Information

| API | Available | Notes |
|---|---|---|
| Data API | ✅ | |
| Orders API | ✅ | See orders docs and bundle reference for more information |
| Subscriptions API | ✅ | |
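As an example of Data API access, the sketch below builds the JSON body for a search over SkySat item types. It assumes the Data API quick-search request format (an `item_types` list plus a `filter` combining `GeometryFilter` and `DateRangeFilter` under an `AndFilter`, posted to `https://api.planet.com/data/v1/quick-search`); confirm these details against the current Data API reference before use. The `build_skysat_search` helper and the sample AOI are hypothetical, and the code only constructs the request body without sending it.

```python
import json
from typing import Any, Dict, Sequence


def build_skysat_search(
    aoi_geojson: Dict[str, Any],
    start_iso: str,
    end_iso: str,
    item_types: Sequence[str] = ("SkySatCollect",),
) -> Dict[str, Any]:
    """Build a search body for items intersecting an AOI within a date range."""
    return {
        "item_types": list(item_types),
        "filter": {
            "type": "AndFilter",
            "config": [
                {
                    "type": "GeometryFilter",
                    "field_name": "geometry",
                    "config": aoi_geojson,
                },
                {
                    "type": "DateRangeFilter",
                    "field_name": "acquired",
                    "config": {"gte": start_iso, "lte": end_iso},
                },
            ],
        },
    }


# Hypothetical AOI: a small GeoJSON polygon (longitude, latitude pairs).
aoi = {
    "type": "Polygon",
    "coordinates": [[
        [-122.42, 37.77], [-122.40, 37.77], [-122.40, 37.79],
        [-122.42, 37.79], [-122.42, 37.77],
    ]],
}
body = build_skysat_search(aoi, "2020-08-01T00:00:00Z", "2020-08-31T23:59:59Z")
print(json.dumps(body, indent=2))
```

Passing `item_types=("SkySatScene",)` or `("SkySatVideo",)` would target the other item types described earlier.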