Pelican Overview

Constellation & Sensor Overview

Pelican, operated by Planet, is a next-generation high-resolution constellation designed to support rapid tasking, improved image quality, and low-latency delivery. Pelican produces multispectral and panchromatic imagery, which is resampled to 50 centimeters per pixel when orthorectified.

The Pelican satellite constellation consists of multiple launches of Generation-1 Pelican spacecraft, beginning commercial operations in 2025. Each satellite is 3-axis stabilized and highly agile, capable of slewing rapidly between targets of interest. Pelican satellites include an electric propulsion unit for orbital control, along with four reaction wheels and three magnetic torquers for attitude control. Generation-2 spacecraft are anticipated to begin operations in 2026.

Pelican supports multiple collection modes including point, line, area, and developing stereo capabilities. Pelican captures imagery as continuous strips that are segmented into framed Scenes. Pelican Gen-1 collects an 8 km swath at nadir.

Pelican Gen-1 satellites contain high-performance Cassegrain telescopes with an effective focal length of 4.03 meters, paired with a line-scan detector system consisting of dual panchromatic sensor arrays (12,288 pixels) and six multispectral bands (6,144 pixels). The panchromatic sensors are diagonally offset by half a pixel, enabling oversampling used in advanced processing and pansharpening. Pelican collects imagery in 6 multispectral bands alongside the panchromatic channel.

Pelican imagery is captured using Time Delay Integration (TDI), enabling high SNR at high resolution. The multispectral pixels have a larger native sample distance, while the panchromatic band provides finer detail for pansharpened and visual products. Pelican collects frame imagery in all tasking modes and is engineered for fast downlink and rapid product availability.

Pelican Spectral Bands

Pelican satellites have two spectral band configurations: Pelican-2 and Generation-1 Pelican (Pelican-3 through Pelican-10). The table below presents both sets of bands side by side for easy comparison. Generation-2 Pelican satellites will have the same band configuration as Generation-1.

| Band | Pelican-2 (nm) | Gen-1 and Gen-2 Pelicans, Pelican-3+ (nm) |
|---|---|---|
| Panchromatic | 450 – 800 | 450 – 800 |
| Blue | 450 – 520 | 465 – 518 |
| Green | 520 – 590 | 547 – 585 |
| Red | 630 – 690 | 650 – 682 |
| Red Edge / RE-I | 693 – 715 | 699 – 716 |
| Red Edge II | 729 – 751 | n/a |
| NIR | 770 – 890 | n/a |
| NIR Wide | n/a | 779 – 885 |
| NIR Narrow | n/a | 846 – 887 |
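For scripted work it can help to hold the band table as data. The sketch below transcribes the band edges from the table above into a dictionary and derives each band's center wavelength and width. The names `PELICAN_BANDS_NM`, `pelican_2`, `pelican_3_plus`, and `band_center_and_width` are illustrative choices, not Planet identifiers.

```python
# Band edges in nanometres, transcribed from the table above.
# None marks a band that a given configuration does not carry.
PELICAN_BANDS_NM = {
    "Panchromatic": {"pelican_2": (450, 800), "pelican_3_plus": (450, 800)},
    "Blue": {"pelican_2": (450, 520), "pelican_3_plus": (465, 518)},
    "Green": {"pelican_2": (520, 590), "pelican_3_plus": (547, 585)},
    "Red": {"pelican_2": (630, 690), "pelican_3_plus": (650, 682)},
    "Red Edge / RE-I": {"pelican_2": (693, 715), "pelican_3_plus": (699, 716)},
    "Red Edge II": {"pelican_2": (729, 751), "pelican_3_plus": None},
    "NIR": {"pelican_2": (770, 890), "pelican_3_plus": None},
    "NIR Wide": {"pelican_2": None, "pelican_3_plus": (779, 885)},
    "NIR Narrow": {"pelican_2": None, "pelican_3_plus": (846, 887)},
}


def band_center_and_width(band, config):
    """Return (center_nm, width_nm) for a band, or None if the band is absent."""
    edges = PELICAN_BANDS_NM[band][config]
    if edges is None:
        return None
    low, high = edges
    return ((low + high) / 2, high - low)


def bands_for(config):
    """List the band names present in one configuration, in table order."""
    return [name for name, cfg in PELICAN_BANDS_NM.items() if cfg[config] is not None]
```

A lookup such as `band_center_and_width("Red", "pelican_3_plus")` makes it easy to check which bands line up between Pelican-2 and later satellites before mixing their data in one analysis.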

Pelican Imagery Products

Pelican products are available for search and download via the Planet APIs, User Interfaces, and integrations. Pelican imagery is delivered in the form of Scene products, which are encoded in the Planet Platform as a set of Item Types and Asset Types. All Pelican imagery is collected as continuous strips using a line-scan sensor and then segmented into framed Scenes for processing and delivery.

Pelican supports multiple radiometric and geometric processing levels, including Level 1A (minimally processed panchromatic), Level 1B Basic (radiometrically and sensor-corrected), and Level 3B Ortho (fully orthorectified radiance, surface reflectance, pansharpened, and visual products). These products are interoperable with Planet’s high-resolution SkySat offerings while improving upon image quality, artifact reduction, and delivery speed.

Item Types

A Pelican Scene Product is an individual framed segment extracted from a Pelican imaging strip. Each strip is captured with a dual-panchromatic + multispectral line-scan system and then divided into Scenes for processing. Pelican Scenes typically cover an 8 km swath width and vary in length depending on the tasking mode and imaging geometry.

Pelican Scenes are represented in the Planet Platform as the PelicanScene item type. Each Scene includes:

- Panchromatic and multispectral imagery

- Geometry footprint and acquisition metadata

- UDM2 (Usable Data Mask) for cloud, haze, shadow, snow/ice, and unusable pixels

- RPC files for Basic products

- Projection and radiometric metadata for Ortho products

Pelican does not include a Collect item type equivalent to SkySatCollect, because Pelican Scenes do not require scene-to-scene mosaicking as SkySat Scenes do.
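As a rough illustration of how the PelicanScene item type might be used in a search, the sketch below builds a request body in the shape used by the Planet Data API quick-search endpoint (`POST https://api.planet.com/data/v1/quick-search`). The helper name `build_pelican_search` is hypothetical, and it is an assumption here that PelicanScene items expose the `geometry` and `acquired` fields like other Planet item types. No request is sent.

```python
import json


def build_pelican_search(aoi_geojson, start_iso, end_iso):
    """Build a quick-search body for PelicanScene items (hypothetical helper).

    aoi_geojson: a GeoJSON geometry dict covering the area of interest.
    start_iso, end_iso: RFC 3339 timestamps bounding the acquisition time.
    """
    return {
        "item_types": ["PelicanScene"],
        "filter": {
            "type": "AndFilter",
            "config": [
                {
                    "type": "GeometryFilter",
                    "field_name": "geometry",
                    "config": aoi_geojson,
                },
                {
                    "type": "DateRangeFilter",
                    "field_name": "acquired",  # assumed to exist for PelicanScene
                    "config": {"gte": start_iso, "lte": end_iso},
                },
            ],
        },
    }


aoi = {
    "type": "Polygon",
    "coordinates": [[
        [-122.45, 37.75], [-122.40, 37.75], [-122.40, 37.80],
        [-122.45, 37.80], [-122.45, 37.75],
    ]],
}
body = build_pelican_search(aoi, "2025-03-01T00:00:00Z", "2025-03-31T23:59:59Z")
payload = json.dumps(body)  # ready to send with an authenticated HTTP client
```

Keeping the body construction separate from the HTTP call makes the filter easy to inspect and reuse across clients.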

Imagery Asset Types

Pelican Scene products are available for download in the form of imagery assets. Multiple asset types are made available for Pelican Scenes, each with differences in radiometric processing, geometric correction, and spectral content. Asset type availability varies by processing level.

L1A Panchromatic (l1a_panchromatic) assets are minimally processed, non-orthorectified panchromatic imagery products. These products include raw-mapped detector values with accompanying calibration coefficients and are designed for advanced users requiring earliest access or custom radiometric workflows.

Basic Panchromatic (basic_panchromatic) assets are non-orthorectified, calibrated panchromatic imagery products. They include Time Delay Integration (TDI) corrections and are transformed to scaled Top of Atmosphere Radiance. These products are designed for data science and analytic workflows that benefit from Pelican’s wide (450–800 nm) panchromatic band and include associated rational polynomial coefficients (RPCs) for user-driven orthorectification.

Basic Analytic (basic_analytic) assets are non-orthorectified, calibrated multispectral imagery products. They contain Pelican’s six spectral bands at native multispectral resolution and are transformed to scaled Top of Atmosphere Radiance. These products are intended for analytic applications requiring spectral fidelity and for users who wish to geometrically correct the imagery themselves using RPCs.

Ortho Panchromatic (ortho_panchromatic) assets are orthorectified, calibrated panchromatic imagery products resampled to a uniform 50 cm pixel size. These products provide high-resolution detail suitable for geospatial analysis, mapping, and applications requiring accurate cartographic projection.

Ortho Analytic (ortho_analytic) assets are orthorectified, calibrated multispectral imagery products transformed to Top of Atmosphere Radiance and sampled to 50 cm for consistency. They are intended for workflows requiring accurate geolocation and multispectral analysis.

Ortho Analytic Surface Reflectance (ortho_analytic_sr) assets are corrected for the effects of the Earth’s atmosphere, accounting for the molecular composition and variation with altitude along with aerosol content. Combining the use of standard atmospheric models with the use of MODIS or VIIRS for water vapor, ozone and aerosol data, this provides reliable and consistent surface reflectance scenes over Planet’s varied constellation of satellites as part of our normal, on-demand data pipeline.

Ortho Pansharpened (ortho_pansharpened) assets are orthorectified multispectral imagery sharpened using Pelican’s oversampled dual-panchromatic channels to achieve 50 cm resolution. These products are designed for multispectral applications requiring the highest available spatial detail combined with spectral richness.

Ortho Visual (ortho_visual) assets are orthorectified, color-corrected RGB imagery optimized for visualization. Lower-resolution multispectral bands are pansharpened to 50 cm using Pelican’s dual-panchromatic imagery. These products are intended for direct visual inspection and easy ingestion into GIS platforms.

UDM2 (*_udm2) assets are Usable Data Masks accompanying both Basic and Ortho products. UDM2 identifies clear, cloud, haze, shadow, snow/ice, confidence, and unusable pixels, enabling users to filter or mask portions of the Scene.
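The asset descriptions above can be condensed into a small lookup for choosing an asset programmatically. This is a sketch only: the `AssetType` class, the `content` labels, and `select_assets` are illustrative, and the processing levels are taken from the Level 1A, 1B, and 3B descriptions earlier in this document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssetType:
    name: str             # asset type identifier as given in this document
    level: str            # processing level: "1A", "1B" (Basic), or "3B" (Ortho)
    orthorectified: bool
    content: str          # illustrative label: "panchromatic", "multispectral", "rgb"


PELICAN_ASSETS = [
    AssetType("l1a_panchromatic", "1A", False, "panchromatic"),
    AssetType("basic_panchromatic", "1B", False, "panchromatic"),
    AssetType("basic_analytic", "1B", False, "multispectral"),
    AssetType("ortho_panchromatic", "3B", True, "panchromatic"),
    AssetType("ortho_analytic", "3B", True, "multispectral"),
    AssetType("ortho_analytic_sr", "3B", True, "multispectral"),
    AssetType("ortho_pansharpened", "3B", True, "multispectral"),
    AssetType("ortho_visual", "3B", True, "rgb"),
]


def select_assets(orthorectified, content):
    """Return asset names matching the requested geometry and spectral content."""
    return [
        asset.name
        for asset in PELICAN_ASSETS
        if asset.orthorectified == orthorectified and asset.content == content
    ]
```

For example, `select_assets(False, "multispectral")` narrows the choice to the one asset suited to user-driven orthorectification of multispectral data.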

Pelican’s full product specifications can be found in the Pelican User Documentation.

Product Naming

Pelican image products follow two naming conventions depending on whether the file is a full Scene or a trimmed deliverable produced as part of task fulfillment. Both conventions provide globally unique identifiers for acquisition time, satellite, and asset type.

Full Scene Image Filename Convention

Full Pelican Scene products use the following naming pattern:

<acquisition date>_<acquisition time>_<satellite_id>_<imageAsset>.<extension>

Examples:

20250305_143613_03_3009_Analytic.tif

20250305_143613_03_3009_Visual.tif

This convention applies to all complete Pelican Scenes generated directly from the imaging strip before trimming or task‑specific processing.

Trimmed Imagery Folder Naming Convention

Trimmed assets, delivered for task fulfillment, include an additional unique capture identifier in the folder name:

<acquisition date>_<acquisition time>_<satellite_id>_<unique-capture-ID>

Example:

20250305_143613_03_3009_3a44e78a-de6d-4d4b-b199-8f142deb10dd
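The two naming conventions above can be parsed with regular expressions. The sketch below is written against the examples shown. It treats everything between the acquisition time and the final field as one opaque identifier (`03_3009` in the examples), because the pattern does not spell out how that segment is subdivided. The function names are hypothetical, and the returned timestamp is left timezone-naive since the convention does not state a time zone.

```python
import re
from datetime import datetime

# <date>_<time>_<identifier>_<imageAsset>.<extension>
SCENE_RE = re.compile(
    r"^(?P<date>\d{8})_(?P<time>\d{6})_(?P<ident>.+)_(?P<asset>[A-Za-z0-9]+)\.(?P<ext>\w+)$"
)
# <date>_<time>_<identifier>_<unique-capture-ID>, where the capture ID looks like a UUID
FOLDER_RE = re.compile(
    r"^(?P<date>\d{8})_(?P<time>\d{6})_(?P<ident>.+)_"
    r"(?P<capture>[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12})$"
)


def _parse(pattern, name):
    match = pattern.match(name)
    if match is None:
        raise ValueError(f"unrecognized Pelican name: {name!r}")
    fields = match.groupdict()
    # Combine date and time into one naive datetime.
    fields["acquired"] = datetime.strptime(
        fields.pop("date") + fields.pop("time"), "%Y%m%d%H%M%S"
    )
    return fields


def parse_scene_filename(filename):
    """Parse a full Scene filename into its fields."""
    return _parse(SCENE_RE, filename)


def parse_trimmed_folder(folder):
    """Parse a trimmed-delivery folder name into its fields."""
    return _parse(FOLDER_RE, folder)


scene = parse_scene_filename("20250305_143613_03_3009_Analytic.tif")
folder = parse_trimmed_folder(
    "20250305_143613_03_3009_3a44e78a-de6d-4d4b-b199-8f142deb10dd"
)
```

Both results share the same `acquired` and `ident` values, which is one way to associate a trimmed delivery with its originating Scene.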

Processing

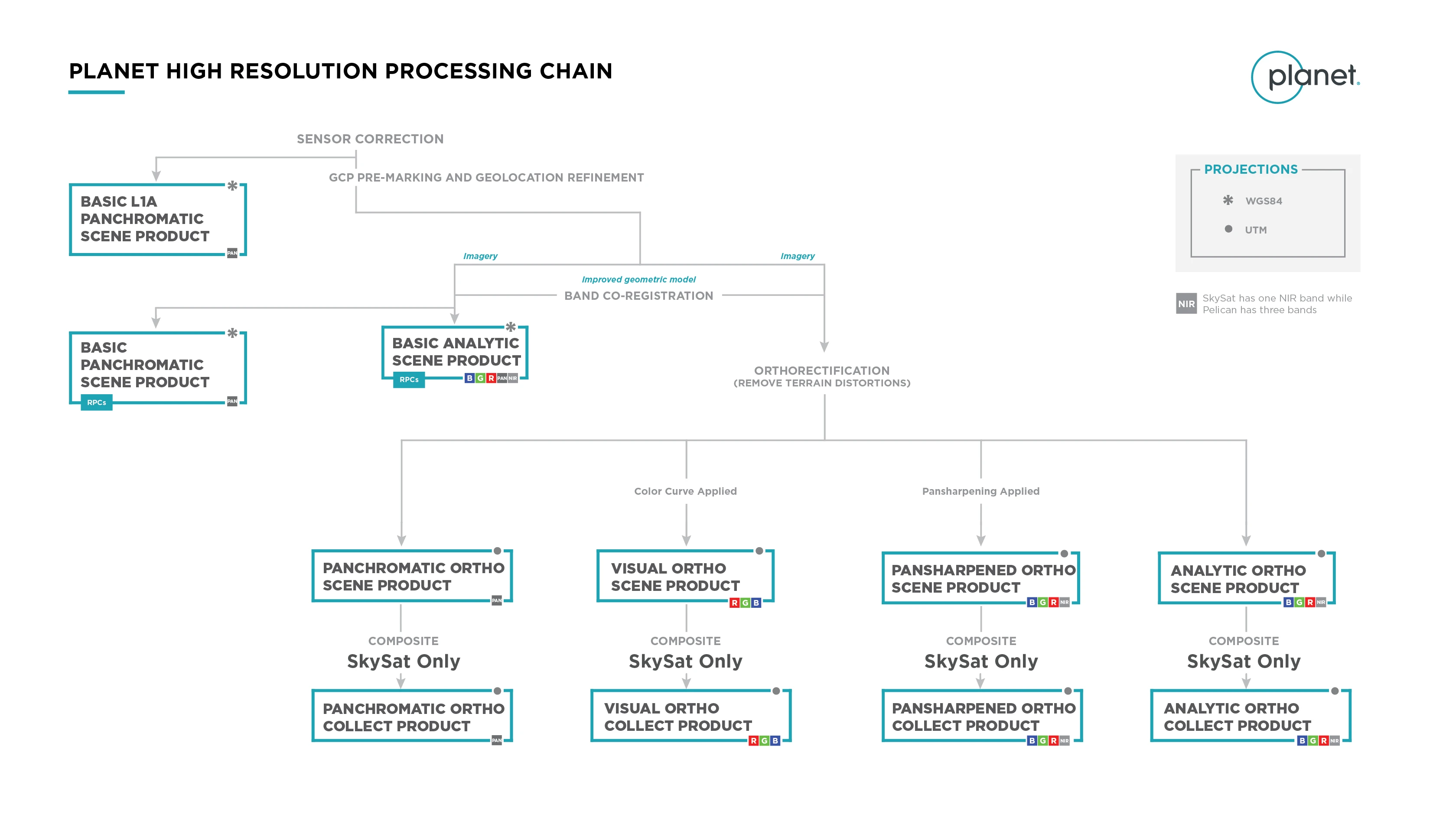

Several processing steps are applied to Pelican imagery to produce the complete set of Pelican Scene products available for download.

Sensor & Radiometric Calibration

Sensor

Darkfield/Offset Correction: Corrects for sensor bias and dark noise. On-orbit calibration collects are captured with the same operational settings during eclipse to yield faithful representative darkfield images. Darkfield images are updated periodically for all bands.

Flat Field Correction: Flat fields are created from on-orbit imagery taken post-launch. These fields are used to correct sensor non-uniformities. Flat field images are updated periodically for all bands.

Band Co‑Registration: Aligns Pelican’s dual panchromatic channels and six multispectral bands using an improved geometric model, ensuring pixel‑accurate spectral alignment across the stack.

Radiometric

Absolute Radiometric Calibration: Converts detector digital number (DN) measurements into physical radiance units (W/(m²·sr·µm)) using calibration coefficients derived from laboratory and on‑orbit calibration.
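The sensor and radiometric corrections described above follow a common textbook chain: subtract the dark level, divide by the flat-field response, then scale to radiance. The sketch below shows that generic chain for one line of detector samples. It is not Planet's implementation, and the parameter names and numeric values are made up for illustration.

```python
def calibrate_sample(raw_dn, dark_dn, flat_gain, radiance_per_dn):
    """Dark-subtract, flat-field, and scale one detector sample to radiance.

    raw_dn:          raw digital number from the detector
    dark_dn:         darkfield (offset) value for this detector column
    flat_gain:       relative flat-field response for this column (1.0 = nominal)
    radiance_per_dn: hypothetical absolute calibration coefficient,
                     in W/(m^2 sr um) per corrected DN
    """
    if flat_gain <= 0:
        raise ValueError("flat-field gain must be positive")
    corrected_dn = (raw_dn - dark_dn) / flat_gain
    return corrected_dn * radiance_per_dn


def calibrate_line(raw_line, dark_line, flat_line, radiance_per_dn):
    """Apply the chain to one scan line; each column has its own dark and flat value."""
    return [
        calibrate_sample(raw, dark, flat, radiance_per_dn)
        for raw, dark, flat in zip(raw_line, dark_line, flat_line)
    ]


# Illustrative values only.
line = calibrate_line(
    raw_line=[1100, 1250, 980],
    dark_line=[100, 110, 95],
    flat_line=[1.0, 1.2, 0.9],
    radiance_per_dn=0.01,
)
```

Because a line-scan detector reuses the same columns for every image row, per-column dark and flat values can be applied identically to each line of a strip.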

Atmospheric Correction: Surface reflectance is determined from Top of Atmosphere (TOA) reflectance, calculated using coefficients supplied with the Planet Radiance product. The Planet Surface Reflectance product corrects for the effects of the Earth's atmosphere, accounting for the molecular composition and variation with altitude along with aerosol content. Combining the use of standard atmospheric models with the use of satellite water vapor, ozone and aerosol data, this provides reliable and consistent surface reflectance scenes over Planet's varied constellations of satellites as part of our normal, on-demand data pipeline. However, there are some limitations to the corrections performed:

- In some instances, there is no satellite data overlapping a Planet scene or the area nearby. In those cases, AOD is set to a value of 0.226 which corresponds to a “clear sky” visibility of 23 km, the aot_quality is set to the satellite “no data” value of 127, and aot_status is set to ‘Missing Data - Using Default AOT’. If there is no overlapping water vapor or ozone data, the correction falls back to a predefined 6SV internal model.

- The effects of haze and thin cirrus clouds are not corrected for.

- Aerosol type is limited to a single, global model.

- All scenes are assumed to be at sea level, and the surfaces are assumed to exhibit Lambertian scattering - no BRDF effects are accounted for.

- Stray light and adjacency effects are not corrected for.
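The first limitation above can be checked for in code. The sketch below flags scenes whose atmospheric correction fell back to the default aerosol value. It assumes the surface reflectance metadata has already been loaded into a dictionary carrying the `aot_quality` and `aot_status` fields named above; how that metadata is read from the product is outside this sketch, and the function name is hypothetical.

```python
# Values quoted in the limitations list above.
DEFAULT_AOD = 0.226
NO_DATA_AOT_QUALITY = 127
DEFAULT_AOT_STATUS = "Missing Data - Using Default AOT"


def used_default_aot(metadata):
    """Return True if the correction used the default AOD instead of satellite data.

    metadata: dict of atmospheric-correction fields (assumed already parsed).
    """
    return (
        metadata.get("aot_quality") == NO_DATA_AOT_QUALITY
        or metadata.get("aot_status") == DEFAULT_AOT_STATUS
    )


# Illustrative metadata dictionaries; the "id" key and values are made up.
scenes = [
    {"id": "scene_a", "aot_quality": 1, "aot_status": "Data Found"},
    {"id": "scene_b", "aot_quality": 127, "aot_status": DEFAULT_AOT_STATUS},
]
fallback_ids = [s["id"] for s in scenes if used_default_aot(s)]
```

Flagging these scenes up front is useful in time-series work, where a scene corrected with the default aerosol value may not be directly comparable with its neighbors.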

Visual

Visual Product Processing

Pelican Visual products present imagery as natural color, optimized for human interpretation. Processing includes:

- Color Curve Application: A custom color profile is applied to enhance visual clarity and alignment with natural appearance.

- Pansharpening: Lower‑resolution multispectral bands are fused with Pelican's dual‑panchromatic channels to produce a 50 cm RGB product.

- Post‑Processing Enhancements: Final tuning steps to ensure consistent tone and contrast across the continuous sequence of images captured by the satellite during a single pass over an area. We do not guarantee visual consistency between non-continuous sequences of images, nor across independent passes over an area.

Geometric Processing

Pelican imagery undergoes a multistep georectification process to ensure positional and geometric accuracy meets Planet’s quality standards. This process transforms the raw satellite imagery into a map-ready product. Planet image products are offered with different levels of processing to correct for sensor and geometric distortions which include the following product levels:

- Basic Products: These are sensor-corrected and geometrically aligned. Basic products are georeferenced to improve positional accuracy beyond what telemetry alone provides, but they are not orthorectified or corrected for terrain distortions.

- Ortho Products: In addition to the corrections applied to Basic products, Ortho products account for terrain distortion. Imagery is orthorectified and projected onto a 50 cm grid using a digital elevation model (DEM) to accurately model local terrain. Ortho products use the UTM WGS84 map projection.
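When combining Ortho products with other data, it is often useful to know which UTM zone a location falls in. The sketch below applies the standard longitude-based zone formula and returns the matching WGS84 UTM EPSG code. It ignores the special zones around Norway and Svalbard, and the zone Planet assigns to a given scene is recorded in that scene's own projection metadata, which should take precedence over this estimate.

```python
import math


def utm_epsg(lon, lat):
    """Return the EPSG code of the standard WGS84 UTM zone containing (lon, lat).

    Uses six-degree zones numbered from 1 at 180 degrees west; codes are
    326xx for the northern hemisphere and 327xx for the southern.
    """
    if not -180.0 <= lon <= 180.0 or not -90.0 <= lat <= 90.0:
        raise ValueError("longitude or latitude out of range")
    zone = int(math.floor((lon + 180.0) / 6.0)) % 60 + 1
    base = 32600 if lat >= 0 else 32700
    return base + zone
```

For instance, a point at longitude -122.4, latitude 37.8 falls in zone 10 north, which corresponds to EPSG:32610.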

Geometric Processing Workflow

Both Basic and Ortho products are generated from a rigorous sensor model refined through these steps:

- Initial Pointing Estimate: Satellite telemetry provides the initial pointing estimate (typically 75–100 m accuracy). Corrections for boresight and optical distortion are applied within a physical camera model.

- Refined Pointing with Global Reference: Ground control tie points from the panchromatic band are matched against global reference imagery. This refines the satellite attitude and corrects low‑frequency satellite motions.

- Band Alignment and Fine Refinement: The remaining panchromatic and multispectral bands are rectified to the initial panchromatic band. This spatially aligns the bands and further refines the camera's attitude, removing higher-frequency satellite motions.

After refinement, the updated physical camera model is used to generate final outputs:

- Basic Products: Bands are aligned within the image plane. Rational Polynomial Coefficients (RPCs) are included for users who want to perform their own orthorectification. RPCs approximate the full camera model but may not be continuous across adjacent tiles.

- Ortho Scene Products: Imagery is projected and resampled onto a fixed 50 cm grid using the refined camera model and a reference DEM.

Geometric Processing Accuracy

The positional accuracy of Planet imagery is contingent upon the efficacy of our multi-step georectification process. Variations in image characteristics and the availability of geodetic control influence the final geospatial accuracy.

Positional Accuracy in the Absence of Ground Control

Imagery acquired under conditions that preclude the extraction of sufficient ground control tie points (GCPs) will exhibit increased positional uncertainty. In such cases, the rigorous physical camera model will necessarily revert to parameter estimations derived predominantly from the initial satellite telemetry data. This results in a nominal absolute geospatial accuracy of 75 to 100 meters circular error 90% (CE90). Conditions contributing to this scenario include, but are not limited to:

- Significant cloud obscuration: Extensive cloud cover prevents line-of-sight to ground features essential for GCP extraction.

- Dominant water bodies: Large water features within a scene lack stable, distinct, and identifiable georeferencing points.

- Dynamic or homogenous terrain: Regions undergoing rapid geomorphological change, or those characterized by uniform, featureless surfaces (for example, snow-covered expanses), impede reliable GCP generation.

Imagery relying solely on telemetry-derived positional estimates can be identified in the product metadata, where the "Ground Control" field is explicitly set to "FALSE".

Influence of Reference Data Fidelity

The absolute geospatial accuracy of Planet's derived image products is directly impacted by the inherent accuracy of the global reference imagery datasets utilized in our georectification pipeline.

Specific details regarding the absolute accuracy of the reference data are systematically reported in Planet's quarterly Image Quality Reports (IQRs). For example, during Q1 through Q2, Planet's primary reference imagery demonstrated an absolute accuracy of 5 meters CE90 across the majority of locations characterized by terrain slopes less than 20 degrees. This CE90 metric signifies that 90% of the measured horizontal errors are expected to be within a 5-meter radius of the true ground position.
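To make the CE90 definition concrete, the sketch below computes it from a set of measured horizontal offsets using the nearest-rank percentile. This is one common convention and may differ from the estimator used in the Image Quality Reports. The sample offsets are invented for illustration.

```python
import math


def ce90(offsets_m):
    """Return the circular error at 90% for (east, north) offsets in meters.

    Uses the nearest-rank method: the smallest radial error such that at
    least 90% of the samples are at or below it.
    """
    if not offsets_m:
        raise ValueError("need at least one offset")
    radial = sorted(math.hypot(dx, dy) for dx, dy in offsets_m)
    rank = (9 * len(radial) + 9) // 10  # integer form of ceil(0.9 * n)
    return radial[rank - 1]


# Invented check-point offsets (east, north) in meters.
sample_offsets = [
    (1.0, 0.5), (-2.0, 1.0), (0.5, -0.5), (3.0, 4.0), (-1.5, -2.0),
    (0.2, 0.1), (2.5, -1.0), (-0.8, 0.9), (1.2, 1.1), (-3.5, 0.5),
]
result = ce90(sample_offsets)
```

With ten samples, the nearest-rank CE90 is the ninth-smallest radial error, so a single large outlier does not determine the result.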

Users may observe a localized degradation in absolute positional accuracy in geographic regions exhibiting:

- Rapid Urban Development or Significant New Construction: Recent anthropogenic modifications may not be fully represented in the current reference data.

- Pronounced Topographical Changes: Areas with dynamic geomorphic processes (for example, landslides, erosion) can lead to discrepancies.

- High Terrain Slopes: Steep slopes inherently introduce greater geometric distortion and can challenge precise orthorectification, potentially reducing positional accuracy even with an accurate digital elevation model (DEM).

Planet updates reference data to mitigate these factors and maintain optimal geospatial processing fidelity. The current DEM and reference imagery accuracy used for orthorectification is detailed in the relevant Image Quality Reports.

Miscellaneous

Rational Polynomial Coefficients (RPCs)

For Pelican Basic Imagery, Planet supplies Rational Polynomial Coefficients (RPCs) similar to SkySat and PlanetScope. RPCs provide a mathematical relationship that maps the 3D ground coordinates (latitude, longitude, elevation) to 2D image coordinates (row, column pixels) and vice-versa. This is essential for accurately geolocating features within an image and for removing geometric distortions caused by sensor geometry, terrain variations, and satellite attitude during image acquisition.

RPCs are ratios of polynomials that approximate a physical sensor model. They consist of a set of coefficients (numerators and denominators) for both forward (ground to image) and inverse (image to ground) transformations. These coefficients are derived as part of the Geometric processing.

The RPCs are an approximation to a rigorous physical sensor model that Planet uses to generate Pelican Orthorectified image products. As such, users who use RPCs may find that their generated ortho image does not match Planet’s orthorectified imagery exactly. Currently, the RPCs are generated for individual tiles and discontinuities in the solution may occur at tile boundaries.

RPCs are supplied with Basic products and are designed for users with advanced image processing and geometric correction capabilities. Basic products are not orthorectified or corrected for terrain distortions by Planet. With the accompanying RPC files, users can perform these corrections themselves using standard GIS tools. Users will need an elevation model (for example, a Digital Elevation Model) to correctly orthorectify this product. For Pelican, the RPC files are supplied as text (.TXT) files. The following table describes the RPC file format. The RPCs are also available in the TIFF header for the Basic product.

Pelican NITF 2.1 Image Product

Planet can produce imagery in the National Imagery Transmission Format (NITF 2.1), compliant with MIL-STD-2500C and guidelines provided by the United States National Geospatial-Intelligence Agency (NGA). The NITF provided for Pelican imagery includes the image itself, metadata, and the UDM. It includes a Generic Linear Array Scanner (GLAS) model in a standard format, which allows users to create orthorectified products from the imagery using a digital elevation model. The GLAS model is an approximation to the full physical model used by Planet. More information on GLAS can be found in Theiss, ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume V-1-2020. Full information on the MIL-STD-2500C standard is available through the United States NITF Technical Board.

NITF for Pelican implements a Generic Linear Array Scanner (GLAS) as its geometric sensor model because Pelican is a linear scanner. As such, Pelican produces a long continuous image which we segment into chunks, rather than a mosaic of images. Further, GLAS is a generic physical sensor model that applies to images that retain their original perspective geometry and have not been warped to fit some other geospatial data.

Automated Delivery

For Pelican, users may order their preferred image type and indicate a delivery location at time of tasking by using Planet’s Automated Delivery feature. This feature automatically triggers generation of the user’s requested image asset as part of the image processing chain. Delivery is also initiated automatically once the asset has been generated. This reduces the number of steps a user must take before using their tasked imagery. More information on how to configure and use Automated Delivery can be found at the Planet Documentation site for Automated Delivery. Additional imaging assets can be ordered using our standard ordering process or by retriggering the automated delivery.

Trimming

By default, Pelican imagery captured to fulfill a tasking request will be delivered trimmed to the tasked AOI. In the case of point targets, a 5 km by 5 km image will be provided. Trimming allows Planet to streamline the delivery process, improving the time to deliver imagery after capture. Additionally, trimming reduces the storage needs for users who choose to download imagery into their own storage locations. The delivered trimmed imagery is only available to the organization that originally tasked the image. Full Pelican Scenes may be obtained from the Planet Archive. Archive imagery may also be trimmed to an AOI by requesting clipping at time of ordering.

Unusable Data Mask (UDM) File

The UDM file is a standard deliverable included in many Pelican Scene order types. It provides a spatially corresponding array identifying areas of unusable data within the associated image. The UDM identifies pixels that are impacted by cloud cover, snow, or shadow, or that fall in non-imaged regions. Only UDM2 is supported for Pelican. Users can learn more about how pixels are labeled at the Planet Documentation site for UDM2.

The UDM2 is delivered in TIF format as an 8-band raster of 8-bit pixels. Each band describes one aspect of the usability of the corresponding image data:

- Band 1: clear mask (a value of “1” indicates the pixel is clear, a value of “0” indicates that the pixel is not clear and is one of the 5 remaining classes below)

- Band 2: snow mask

- Band 3: shadow mask

- Band 4: light haze mask

- Band 5: heavy haze mask (all images acquired after November 29, 2023 will have a value of “0” in band 5)

- Band 6: cloud mask

- Band 7: confidence map (a value of “0” indicates a low confidence in the assigned classification, a value of “100” indicates a high confidence in the assigned classification)

- Band 8: unusable data mask
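A typical use of these bands is to estimate how much of a Scene is usable. The sketch below combines Band 1 (clear) with Band 7 (confidence) and works on plain nested lists so that it stays self-contained. In practice the bands would be read from the UDM2 TIF with a raster library. The function name and the confidence threshold of 50 are arbitrary choices for illustration, not Planet recommendations.

```python
def usable_fraction(clear_band, confidence_band, min_confidence=50):
    """Return the fraction of pixels that are clear with sufficient confidence.

    clear_band:      rows of Band 1 values (1 = clear, 0 = not clear)
    confidence_band: rows of Band 7 values (0 to 100)
    min_confidence:  illustrative threshold for accepting a classification
    """
    total = 0
    usable = 0
    for clear_row, conf_row in zip(clear_band, confidence_band):
        for clear, conf in zip(clear_row, conf_row):
            total += 1
            if clear == 1 and conf >= min_confidence:
                usable += 1
    return usable / total if total else 0.0


# Tiny invented 2 x 2 example.
clear = [[1, 1], [0, 1]]
confidence = [[90, 40], [95, 80]]
fraction = usable_fraction(clear, confidence)
```

The same per-pixel test can be turned into a boolean mask and applied to the imagery bands to exclude cloud, haze, shadow, and snow before analysis.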